Neural Encoding of Music and Speech

Investigating subcortical and cortical neural encoding of the acoustics of music and speech in human listeners

The first publication of this project is out!

Check it out here:

Shan, T., Cappelloni, M.S. & Maddox, R.K. Subcortical responses to music and speech are alike while cortical responses diverge. Sci Rep 14, 789 (2024). https://doi.org/10.1038/s41598-023-50438-0

The first part of the project develops a robust method for deriving the human subcortical response to continuous naturalistic music, which had not been done successfully before. We then use the new method to explore and compare the subcortical encoding of music and speech.

Key Findings

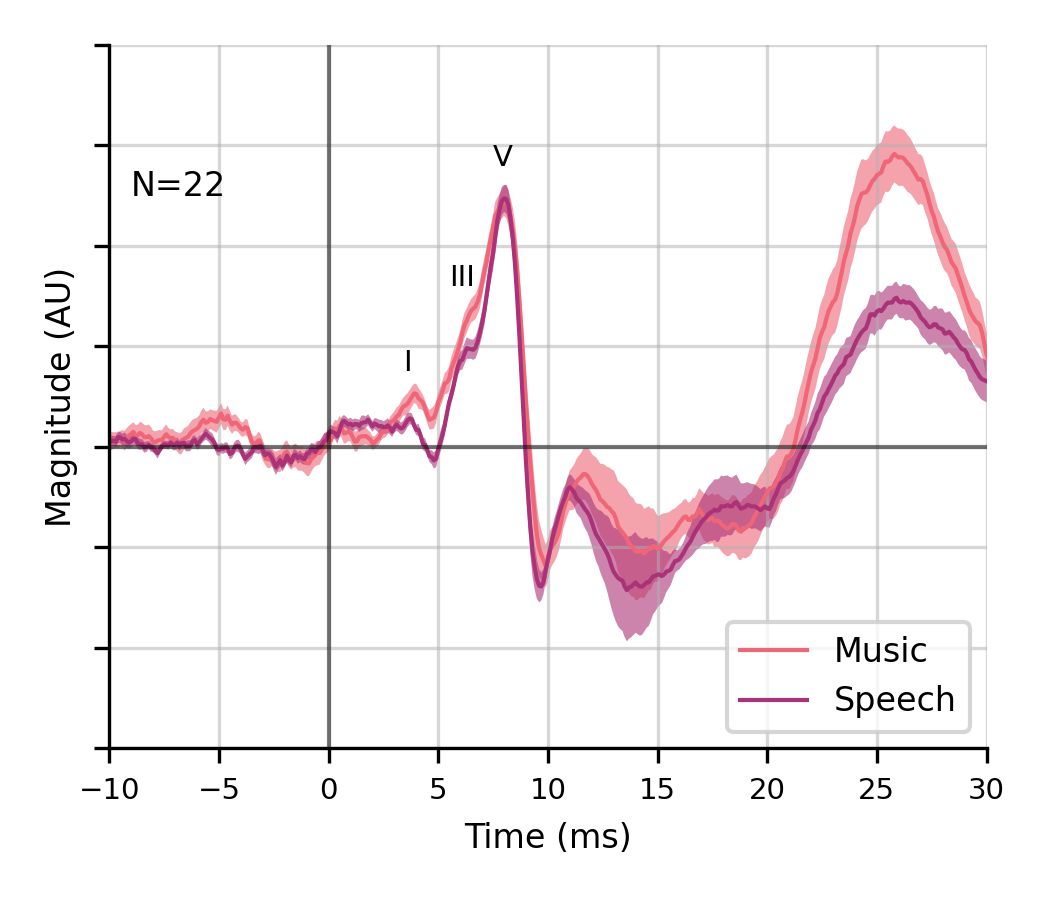

- We developed a novel method to derive the human auditory brainstem response (ABR) to continuous naturalistic sounds, based on the deconvolution paradigm. As the regressor, we used a physiological signal: the modeled auditory nerve firing rate from a detailed computational model of the auditory periphery.

- This method substantially improves on current ABR derivation and can be generalized to all types of natural sounds.

- We used quantitative metrics, such as response SNR, prediction accuracy, and spectral coherence, to assess the reliability and robustness of our newly developed method. The method is also time-efficient.

- With our new method, we found no appreciable impact of stimulus class (i.e., music vs. speech) on the way stimulus acoustics are encoded subcortically. The new analysis method also made cortical music and speech responses more similar, although some differences remained.
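To make the deconvolution idea above concrete, here is a minimal sketch of frequency-domain deconvolution: the EEG is divided by the regressor in the frequency domain (cross-spectrum over regressor power spectrum), and the result is transformed back to a response kernel over time lags. This is not the project's code. The function name `derive_response`, the small `eps` stabiliser, the single-trial setup, and the synthetic white-noise regressor (standing in for a modeled auditory nerve firing rate) are all assumptions made for illustration.

```python
import numpy as np


def derive_response(regressor, eeg, fs, t_start=-0.05, t_stop=0.2, eps=1e-12):
    """Estimate a response kernel by circular frequency-domain deconvolution.

    Hypothetical helper: a single-trial sketch, not the published pipeline.
    """
    x_fft = np.fft.fft(regressor)
    y_fft = np.fft.fft(eeg)
    power = np.abs(x_fft) ** 2
    # Cross-spectrum divided by regressor power; eps (assumed) avoids 0/0.
    w = np.fft.ifft(np.conj(x_fft) * y_fft / (power + eps * power.max())).real
    # Negative lags sit at the end of the circular result; rotate them forward.
    n_pre = int(round(-t_start * fs))
    n_post = int(round(t_stop * fs))
    kernel = np.roll(w, n_pre)[: n_pre + n_post]
    lags = np.arange(-n_pre, n_post) / fs
    return lags, kernel


# Synthetic check: build "EEG" by circularly convolving a known kernel
# with a noise regressor, then try to recover that kernel.
rng = np.random.default_rng(0)
fs = 1000
regressor = rng.standard_normal(4096)
true_kernel = np.hanning(20)
padded = np.zeros(regressor.size)
padded[: true_kernel.size] = true_kernel
eeg = np.fft.ifft(np.fft.fft(regressor) * np.fft.fft(padded)).real

lags, kernel = derive_response(regressor, eeg, fs, t_start=-0.01, t_stop=0.03)
```

In this noise-free toy case the recovered kernel matches `true_kernel` at lags 0 to 19 ms and is close to zero before lag 0. With real recordings the estimate would be noisy and would normally be combined across many trials.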

Please refer to the publication for more details!

Data are available on OpenNeuro; code is available on GitHub.

The second part of this project quantitatively compares our newly developed method with other methods that use the deconvolution paradigm, with the aim of guiding the choice of approach when deriving ABRs from natural speech and other natural sounds.
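One way such a comparison could be scored is by prediction accuracy: convolve each candidate regressor with a response kernel and correlate the prediction with the measured EEG. The sketch below is illustrative only. The function `prediction_accuracy`, the two toy regressors, and the shared kernel are assumptions, and a real comparison would fit a separate kernel per regressor and evaluate on held-out data.

```python
import numpy as np


def prediction_accuracy(regressor, kernel, eeg):
    """Pearson correlation between measured EEG and the EEG predicted by
    convolving a regressor with a causal kernel (hypothetical helper)."""
    predicted = np.convolve(regressor, kernel)[: len(eeg)]
    return np.corrcoef(predicted, eeg)[0, 1]


# Toy data: the "EEG" is generated from regressor_a plus noise, so
# regressor_a should predict it better than the distorted regressor_b.
rng = np.random.default_rng(0)
regressor_a = rng.standard_normal(5000)
regressor_b = np.abs(regressor_a)  # assumed alternative: rectified signal
kernel = np.hanning(30)
eeg = np.convolve(regressor_a, kernel)[:5000] + rng.standard_normal(5000)

r_a = prediction_accuracy(regressor_a, kernel, eeg)
r_b = prediction_accuracy(regressor_b, kernel, eeg)
print(f"regressor A: r = {r_a:.2f}, regressor B: r = {r_b:.2f}")
```

A higher correlation suggests that the regressor captures more of the stimulus-driven response, which is the kind of evidence that could help in choosing between approaches.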

The results have been presented in a conference poster, and the final paper is coming soon!